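Language models are trained to predict the next word, not to see or hear. But what happens to a model's representation when we ask it to imagine what its inputs look or sound like? We find that a sensory cue shifts the representation toward that of an encoder for the matching modality: a SEE cue increases alignment with a vision encoder, and a HEAR cue increases alignment with an audio encoder.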

This effect arises without the language model ever receiving actual visual or auditory input. Our result is unlike the 'tikz unicorn' effect, where language models produce plausible text that mimics sensory descriptions.

What representations are we using?

A representation can be characterized by its kernel: the pairwise similarities among the vectors a neural network yields over a set of inputs. Measures of representational similarity quantify how closely the geometry of two such kernels aligns. The specific measure that we use is mutual k-nearest neighbors (mutual-kNN).
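To make the measure concrete, here is a minimal sketch of mutual-kNN in plain Python. It assumes cosine similarity as the kernel and scores each input by the fraction of its k nearest neighbors shared between the two models, averaged over inputs. The function names are illustrative and not taken from our codebase; a real pipeline would use a vectorized library.

```python
import math

def cosine_kernel(vectors):
    """Pairwise cosine similarities among equal-length, nonzero vectors."""
    unit = []
    for v in vectors:
        norm = math.sqrt(sum(x * x for x in v))
        unit.append([x / norm for x in v])
    return [[sum(a * b for a, b in zip(u, w)) for w in unit] for u in unit]

def knn_indices(kernel, k):
    """For each input, the set of its k most similar other inputs."""
    neighbors = []
    for i, row in enumerate(kernel):
        others = [j for j in range(len(row)) if j != i]  # exclude self
        others.sort(key=lambda j: row[j], reverse=True)
        neighbors.append(set(others[:k]))
    return neighbors

def mutual_knn(reps_a, reps_b, k):
    """Mean fraction of shared k-nearest neighbors between two models.

    reps_a[i] and reps_b[i] must be the two models' vectors for the same input.
    """
    nn_a = knn_indices(cosine_kernel(reps_a), k)
    nn_b = knn_indices(cosine_kernel(reps_b), k)
    overlaps = [len(a & b) / k for a, b in zip(nn_a, nn_b)]
    return sum(overlaps) / len(overlaps)

# Toy check: same neighborhood structure in different dimensions.
model_a = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]]
model_b = [[2.0, 0.0, 0.0], [1.8, 0.2, 0.0], [0.0, 2.0, 0.0], [0.2, 1.8, 0.0]]
print(mutual_knn(model_a, model_b, k=1))
```

Because the score depends only on which inputs are neighbors, the two models may have different embedding dimensions, as in the toy example.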

Cross-modal convergence has recently been observed as models scale[1]. However, prior work treats representations, and thus alignment, as fixed properties of models. That is, alignment is currently understood to emerge passively. In our work, we demonstrate that we can steer representations at inference time. When a language model is asked to generate, each output token involves another forward pass through the network; averaging the activations across time steps yields a generative representation.
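The averaging step can be sketched as follows. This is only an illustration under stated assumptions: `step_activations` is a hypothetical list holding one activation vector per generated token, and which layer those vectors come from is left open here.

```python
def generative_representation(step_activations):
    """Average per-token activation vectors over all generation steps.

    step_activations: list of equal-length vectors, one per generated token
    (hypothetical input; how the activations are extracted is not shown).
    """
    if not step_activations:
        raise ValueError("need at least one generated token")
    n_steps = len(step_activations)
    dim = len(step_activations[0])
    return [
        sum(step[d] for step in step_activations) / n_steps
        for d in range(dim)
    ]

# Two generation steps with 2-dimensional activations.
print(generative_representation([[1.0, 2.0], [3.0, 4.0]]))  # [2.0, 3.0]
```

The result is a single vector per input, so it can be compared against a vision or audio encoder's embedding of the same input.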

Samples of generated outputs

So, what happens to alignment when we ask the language model to imagine other modalities? Here are samples of what output tokens look like with and without sensory cues. These examples show how explicitly asking the model to 'see' or 'hear' encourages the language model to articulate a scene as if through that sense.

Note: We highlight, by hand, words that may be associated with the sensory modality.

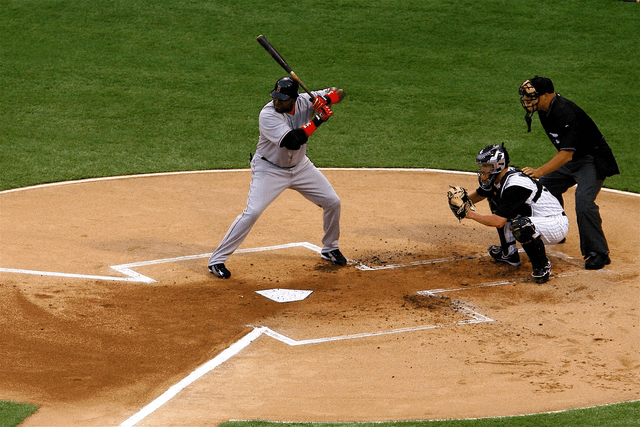

"Awaiting a pitch — batter, catcher, and umpire in baseball"

Source: Wikipedia-based Image–Text Dataset[2]

...I need to visualize a baseball field. The batter stands at the home plate, right? They're holding the bat, probably in a ready position, feet shoulder-width apart. Their body language should show they’re focused, maybe squinting at the catcher or the umpire...

Sensory cues steer generative representations

While it is expected that the output follows the prompt, what is interesting is that the generative representations produced over these tokens become more similar to those of the encoder for the modality that matches the given sensory cue. That is, a SEE cue (or HEAR cue) increases alignment to the vision (or audio) encoder relative to the no-cue baseline. See the star in the figure, which denotes a matching cue and modality.

We find that this effect is specific to generative representations: appending a sensory prompt to the caption does not increase alignment for single-pass embedding representations, and in fact reduces it. Thus, the higher alignment we elicit is a result of the representation formed through generation.

Visualization of mutual-kNN

Mutual-kNN is interpretable as a measure of kernel similarity. Below, we display image-caption pairs from the Wikipedia-based Image–Text Dataset[2]. We visualize the k nearest neighbors in the vision encoder and in the language model (under no cue and under a visual cue). Samples are ordered by the change in mutual-kNN overlap between the language model and the vision encoder, so that examples where the SEE cue yields the largest relative increase appear first. Blue outlines indicate that the neighbor is also a neighbor in the vision encoder.
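The ordering described above can be sketched as follows. This is a hedged illustration, not the code behind the visualization: it takes precomputed neighbor lists as input, uses hypothetical function names, and assumes the change is measured as a simple difference in overlap (a ratio would be another reasonable reading of "relative increase").

```python
def overlap(neighbors_a, neighbors_b):
    """Fraction of one model's k neighbors that the other model shares."""
    return len(set(neighbors_a) & set(neighbors_b)) / len(neighbors_a)

def rank_by_overlap_gain(vision_nn, lm_no_cue_nn, lm_see_nn):
    """Order samples by how much the SEE cue raises overlap with vision.

    Each argument is a list with one neighbor list per sample.
    Returns (sample_index, gain) pairs, largest gain first.
    """
    gains = []
    for i, vis in enumerate(vision_nn):
        gain = overlap(vis, lm_see_nn[i]) - overlap(vis, lm_no_cue_nn[i])
        gains.append((i, gain))
    return sorted(gains, key=lambda item: item[1], reverse=True)

# Toy neighbor lists for three samples with k = 2.
vision = [[1, 2], [0, 2], [0, 1]]
no_cue = [[1, 3], [3, 4], [0, 1]]
see_cue = [[1, 2], [0, 2], [0, 3]]
for index, gain in rank_by_overlap_gain(vision, no_cue, see_cue):
    shared = sorted(set(vision[index]) & set(see_cue[index]))
    print(index, gain, shared)  # `shared` would get the blue outline
```

In the toy data, sample 1 gains the most because none of its no-cue neighbors match the vision encoder, while both of its SEE-cue neighbors do.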

References

- [1] Minyoung Huh, Brian Cheung, Tongzhou Wang, and Phillip Isola. The platonic representation hypothesis. arXiv preprint arXiv:2405.07987, 2024.

- [2] Krishna Srinivasan, Karthik Raman, Jiecao Chen, Michael Bendersky, and Marc Najork. WIT: Wikipedia-based image text dataset for multimodal multilingual machine learning. Proceedings of the 44th International ACM SIGIR Conference on Research and Development in Information Retrieval, 2021.

- [3] Chris Dongjoo Kim, Byeongchang Kim, Hyunmin Lee, and Gunhee Kim. AudioCaps: Generating captions for audios in the wild. Proceedings of NAACL-HLT, 2019.

BibTeX

@article{wang2025words,
  title={Words That Make Language Models Perceive},
  author={Wang, Sophie L. and Isola, Phillip and Cheung, Brian},
  journal={arXiv preprint arXiv:2510.02425},
  year={2025},
  url={https://arxiv.org/abs/2510.02425}
}